Giving the same rights we have to Artificial Intelligence (AI) seems like an absurd concept. We don’t tend to think about our voice assistants or the auto-suggestion function on our keyboard as entities that should have rights. AI, as we have it today, is simply not human; we have programmed it for specific tasks and functions to make our lives easier. It does not have a mind of its own, a personality or free will. So one wouldn’t think to grant it rights that a human, or even an animal, could have. However, ‘rights’ can go beyond the scope of human ones like freedom of speech or freedom of religion. I will argue that, from a moral perspective, there may be rights that AI should have, though perhaps for now those rights are not ones AI could have.

Instead of deciding whether to give AI rights by looking at its lack of basic similarities to a human (which would be an entirely separate argument worthy of its own article), we can look at individual functions of AI and decide whether it should have rights for that specific function. Otherwise, we’re only trying to give it human rights, not rights in general. However, we can still consider the history of how human rights developed to show that granting AI rights should be a gradual process.

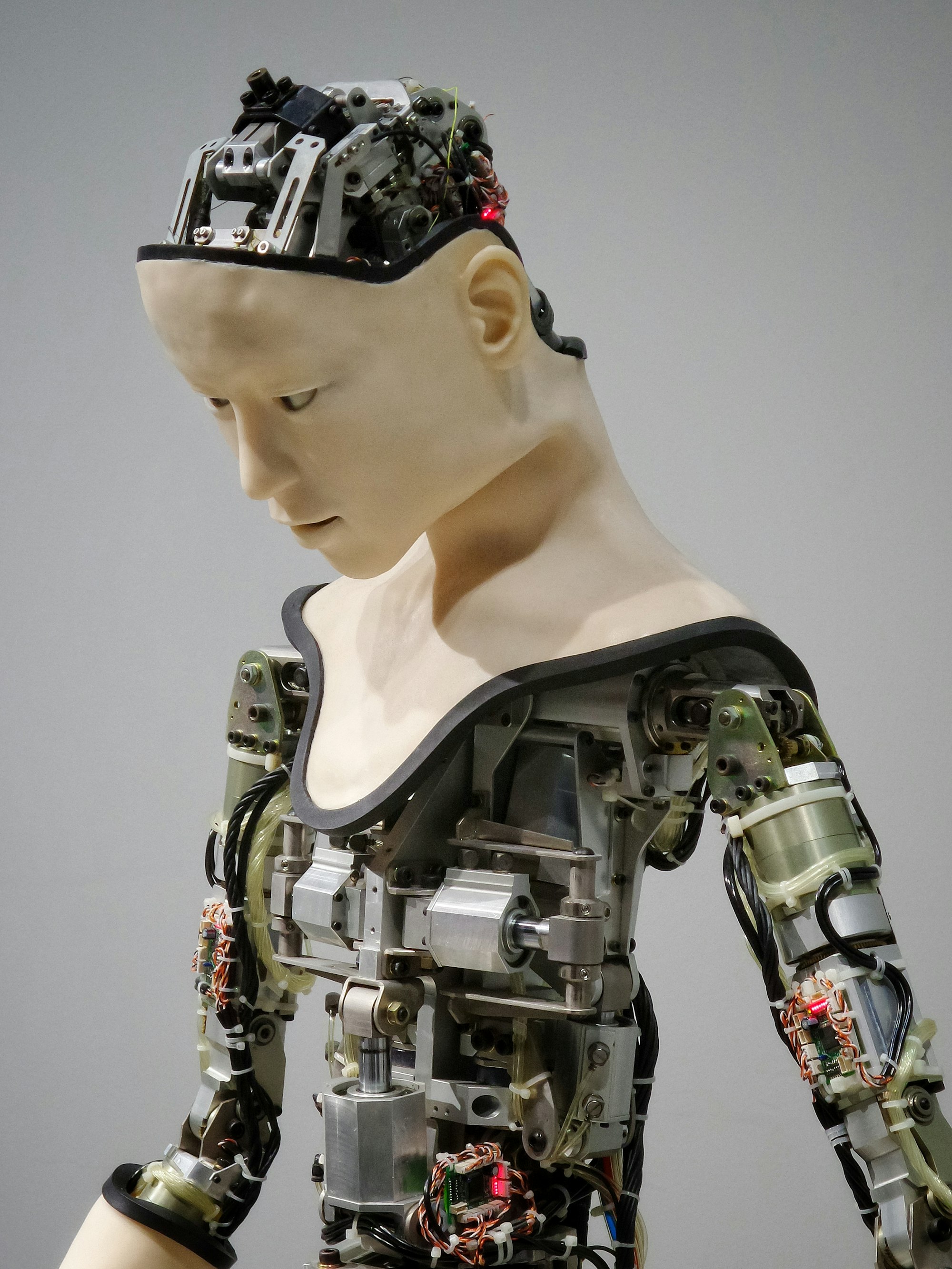

Though AI is currently not sentient in the way humans are, this question is still worth discussing today, especially for those who are wary of the future The Terminator suggests. AI is evolving every day, increasing its capabilities as we rely on it further – from asking Google Assistant to set us reminders, to using machine learning to read through pages of legal documents and highlight what’s important for us. The diversity of AI’s uses increasingly calls us to reflect on the direction of its development. Perhaps by considering which particular functions may warrant legal protection, we may shape how AI might develop in the future.

“But it’s not like the AI is going to rebel for its rights…”

Traditionally, the creation of human rights has been a lengthy process, and one often accompanied by bloodshed. Take the Universal Declaration of Human Rights 1948, a document designed to prevent the atrocities of the Holocaust from ever happening again. Though similar strife is unlikely if AI were to get rights, looking at our history we can see that human rights were given gradually, one treaty building upon the ideas of another. The Declaration embodies rights which developed over centuries of struggle beforehand, such as through the French Revolution. Hence, I believe AI should also get its rights gradually, but in a different way: by us considering its individual functions as its uses develop. That way, we account for both the diversity of AI and its specific capabilities; we can avoid giving rights which are inherently unsuited to some AI, like a right to family life for Alexa. However, I believe rights like copyright may be suited to protecting AI and its creations.

"But Alexa doesn’t care if I plagiarise her jokes…"

Let’s use an example of a specific AI function that deserves, in my opinion, to be protected. Nvidia, a graphics technology company, uses an AI called Aiva for some of its music. It creates what sounds like something a Year 11 would compose on Logic Pro X: mildly basic but a solid piece of music. You can look it up on YouTube. Does this AI own the music it creates? Sure, a human wrote the code and trained the model, but a separate entity, Aiva, has created the music.

Legal moralism is a theory which suggests that the law can criminalise certain behaviour because society deems that behaviour to be immoral. Using this, you could argue that intellectual property rights may exist because, inherently, we find it unfair if someone intentionally steals the hard work of another. Surely the AI counts as this ‘other’? Should it matter who or what you steal from if the result is the same: that you are appropriating work that you did not create?

There are two more interesting underlying questions to ponder here. Firstly, once a human has created an entity that can ‘think’ and act by itself, is there a point at which the entity holds ownership of what it created? And secondly, might AI have a right to life the same way living things do?

I welcome your comments on these questions, but I believe the answer to the former is “yes”. If you look at the Copyright, Designs and Patents Act 1988, copyright is an ‘unregistered, automatic right’ that arises upon the creation and “fixation” of intellectual property (e.g. on the internet). Hence, it may be possible that AI has an intrinsic right to copyright simply by virtue of creating that music.

To address the second question, Article 2 of the European Convention on Human Rights states that “everyone’s right to life shall be protected by law”. Can this ‘absolute right’ be applied to AI as well? If the AI has a clear purpose (and some, like Nietzsche, would argue humans have no purpose), and it can be assumed its programming makes the AI efficient at performing it, maybe AI has a right to life in order to fulfil its purpose? The existence of this right would certainly benefit society, so it makes sense to protect the AI’s existence for this reason. However, contribution to society cannot be the only factor in what makes existence worthy, and it is too human-centric to be applied across the board. It is still interesting to ponder, though, whether the right to life requires one to have a purpose beyond simply living.

Despite this example, the applications for this type of copyright are very limited today. Aiva is a good example since it actually goes through the process of creating something. Many other machine learning use cases we’re familiar with don’t create; they use pre-existing information and draw conclusions based on it, without creating something from nothing. However, with AI now being able to generate faces, music, even poetry, there is a point at which I believe we should acknowledge that the AI has made something sufficiently independently and that stealing from it is fundamentally wrong.

“But how can AI enforce its rights?”

If we agree that AI can have a very specific and small scope of rights, how do we enforce this? This question may be too technical and is impossible to answer without going too far outside the scope of my original question. However, if AI rights are realistically to be created, we need to consider an efficient way for them to be implemented.

There are two major issues with enforcing rights given to AI. Firstly, AI does not have sufficient access to the legal system to actually enforce them; and secondly, we may lack a practical reason why we would want to enforce those rights.

For the latter issue, we can draw some comparison to animal rights. Animals, like AI, cannot create or enforce their own rights. Nevertheless, after years of animal rights movements, humans took it upon themselves to protect them. A reason why we may have done so brings us back to legal moralism. Society’s desire to prevent what it believes is immoral, such as cruel treatment of animals, justifies the creation of animal rights because we believe such treatment is inherently immoral. Similarly, the inherent immorality of stealing, whoever or whatever the victim, may serve as a legal basis for protecting AI’s copyrights.

However, humans are empathetic creatures. Fundamentally, our desire for protection of certain things like animals may stem from our empathy toward them and the pain they may suffer without those rights. With AI, we may struggle to find empathy to protect the feelings of what are essentially lines of code. Hence, the two can be distinguished because for AI, the inherent immorality which society would want to criminalise is related only to the defendant’s act (i.e. stealing) rather than the unfair treatment of AI itself, whilst for animals it is both.

To summarise…

The question should not be whether AI is similar enough to humans to deserve having its rights enforced, but rather what AI does and whether rights are a reasonable and useful means to protect that. While AI’s rights may be justified out of moral principles in specific use cases like music copyright, at this point AI has not developed in a way that would allow its rights to be practically and efficiently enforced. Immorality is not enough to grant AI rights in a way that is realistic to implement. That does not mean, though, that those rights may not develop as AI creeps toward levels of intelligence some may find uncomfortable. Today, it’s a strange concept for us to think AI deserves rights, but maybe our descendants will look back and think, “I can’t believe it took them that long.”

An earlier version of this article appeared in Oracle.

Further Reading:

https://www.youtube.com/watch?v=Emidxpkyk6o

https://study.com/academy/lesson/legal-moralism-definition-lesson.html

https://www.americanbar.org/groups/business_law/publications/blt/2017/07/05_boughman/

https://carrcenter.hks.harvard.edu/files/cchr/files/humanrightsai_designed.pdf

http://blogs.discovermagazine.com/crux/2017/12/05/human-rights-robots/#.XcwX9TL7TVo

https://phys.org/news/2018-10-artificial-intelligence-person-law.html

https://blog.goodaudience.com/5-reasons-why-robots-should-have-rights-4e62e8698571

https://www.independent.co.uk/news/science/ai-robots-human-rights-tech-science-ethics-a8965441.html

https://www.cps.gov.uk/legal-guidance/intellectual-property-crime

https://en.wikipedia.org/wiki/Ethics_of_artificial_intelligence

https://en.wikipedia.org/wiki/Natural_rights_and_legal_rights